Decode Data. Deploy AI. Dominate Markets.

Your compass in an AI- and data-driven world, boosting productivity and growth with smart data and AI solutions.

2024

Data-Driven Insights

% Data Storage Cut with Data Deduplication

More Profitable with Data Management

Gain More Customers with Data Strategy


Certifications

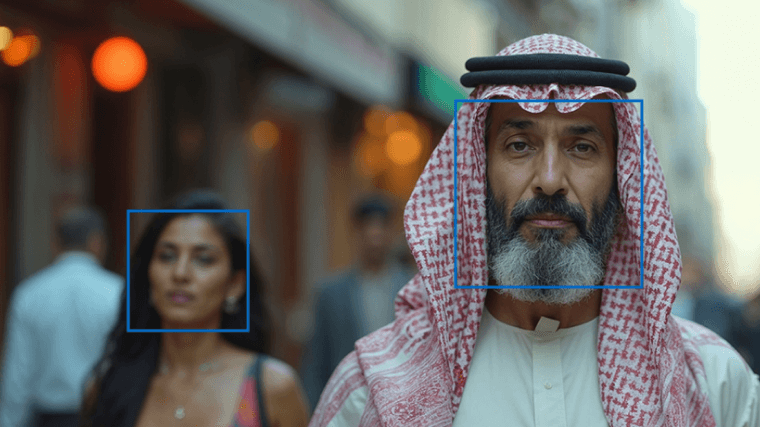

Some of the Problems That We Solve

Problem

My data is dispersed and disorganised

Solution

Services

Technologies: Snowflake, Amazon Redshift, AWS Glue, and AWS Lambda

Problem

I can’t get real-time insights from my data

Solution

Services

Technologies: Azure Stream Analytics and Power BI

Problem

My tasks are manual and time-consuming

Solution

Services

Technologies: UiPath and Flowise

Problem

My data storage is costly and inefficient

Solution

Services

Technologies: AWS S3, MinIO, and ClickHouse

What Our Clients Say

We needed a world class, fast and agile full stack data team – Data Pilot have been that and so much more.

Mark Patchett

Founder at Growth Shop

Services Provided

Data Engineering

Advanced Analytics and BI

Data Pilot is deep and professional. They have delivered accurate testing results. The team is professional and maintains communication through virtual meetings, email, and messaging apps.

Stephen Youdeem

CEO, RYIS

Services Provided

AI and ML

MLOps

Data Pilot is helping Lulusar leverage data to create the optimal experience for its customers & business. Together, we are pursuing in-depth analytics and predictive modeling to optimize how we grow & retain customers.

Saif Hassan

Director, Lulusar

Services Provided

Data Engineering

ETL

Data Visualisation

Data Strategy

Machine Learning

Forecasting

Marketing Analytics

In Pakistan Single Window, we often work with external partners and consultants, but collaborating with Data Pilot has been a truly transformative experience. They helped PSW craft its enterprise data management strategy that is currently being implemented by us. Strongly recommend Data Pilot for any organization that is seeking innovative, impactful solutions to modernize their data management practices.

Aftab Haider

CEO, PSW

Services Provided

Data Strategy

Data Management

Their knowledge of data analytics space is at an expert level.

Dennis Hecht

Chief Product & Analytics Officer, 7 Knots Digital

Services Provided

Data Engineering

Data Analytics

BI

We have partnered with Data Pilot on quite a few of our projects. This strategic relationship was not only key to our success but also resulted in satisfied clients.

Debbie Wallace

PM Assistant at DevWise

Services Provided

AI & ML

MLOps

Their level of responsiveness, expertise, and dedication to our urgent timeline was exemplary.

David Stier

Co-Founder, Social Media Analytics Company

Services Provided

AI & ML

Data Engineering

Advanced Analytics and BI

Your Industry, Reinvented with Data Pilot

Whatever your industry, we tailor our solutions to your unique challenges so you can lead with confidence.

Success Stories

Blogs

Ready to Turn Your Data Into Actionable Insights?

Your transformation starts here.